
Market Research Report

Product Code

1876622

Transformer-Optimized AI Chip Market Opportunity, Growth Drivers, Industry Trend Analysis, and Forecast 2025 - 2034


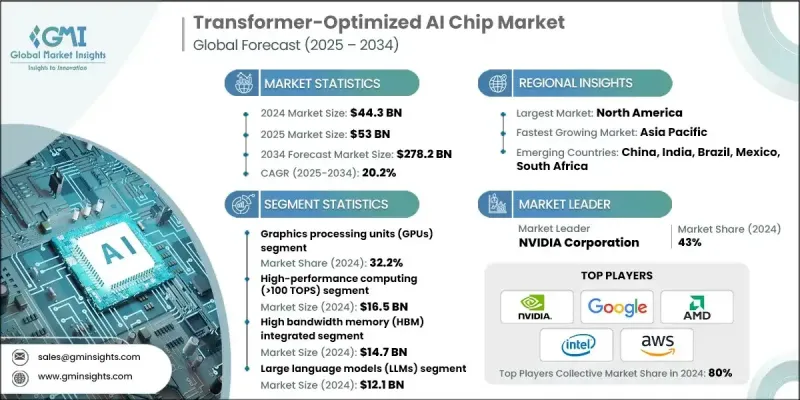

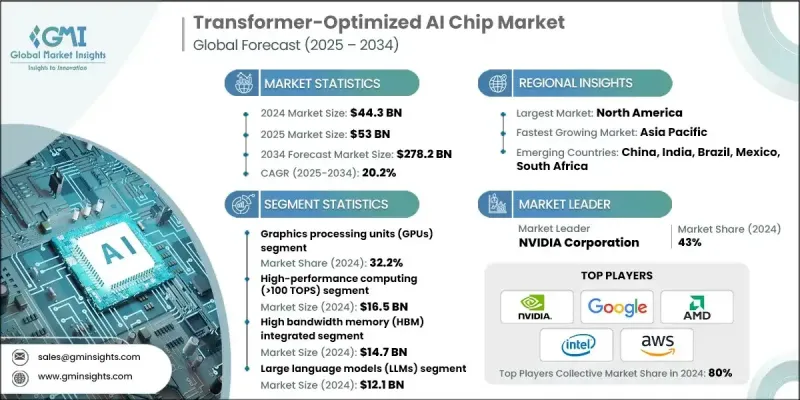

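The global Transformer-optimized AI chip market was valued at USD 44.3 billion in 2024 and is projected to grow at a CAGR of 20.2% to reach USD 278.2 billion by 2034.

The market is growing rapidly as industries increasingly demand specialized hardware designed to accelerate transformer-based architectures and large language model (LLM) operations. These chips are becoming indispensable in AI training and inference workloads, where high throughput, low latency, and energy efficiency are critical. The rise of domain-specific architectures that combine transformer-optimized compute units, high-bandwidth memory, and advanced interconnect technologies is driving adoption across next-generation AI ecosystems. Sectors such as cloud computing, edge AI, and autonomous systems are integrating these chips to handle real-time analytics, generative AI, and multimodal applications. The emergence of chiplet integration and domain-specific accelerators is changing how AI systems scale, enabling higher performance and efficiency. At the same time, advances in memory hierarchies and packaging technologies are reducing latency and raising compute density, allowing transformers to operate closer to the processing units. These advances are reshaping AI infrastructure worldwide, placing transformer-optimized chips at the center of high-performance, energy-efficient, and scalable AI processing.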

| Market Scope | |
|---|---|
| Start Year | 2024 |
| Forecast Years | 2025-2034 |
| Start Value | USD 44.3 billion |
| Forecast Value | USD 278.2 billion |
| CAGR | 20.2% |

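The graphics processing unit (GPU) segment held a 32.2% share in 2024. GPUs are widely adopted because of their mature ecosystem, strong parallel computing capability, and proven effectiveness in running transformer-based workloads. Their ability to deliver massive throughput for training and inference of large language models makes them essential in industries such as finance, healthcare, and cloud services. With their flexibility, broad developer support, and high compute density, GPUs remain the foundation of AI acceleration in data centers and enterprise environments.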

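The high-performance computing (HPC) segment, covering chips that exceed 100 TOPS, generated USD 16.5 billion in 2024, a 37.2% share. These chips are essential for training large transformer models that require extreme parallelism and throughput. HPC-class processors are deployed in AI-driven enterprises, hyperscale data centers, and research institutions to handle demanding applications such as complex multimodal AI, large-batch inference, and LLM training involving billions of parameters. Their role in accelerating compute workloads has made HPC chips a cornerstone of AI innovation and infrastructure scalability.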

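The North America Transformer-optimized AI chip market held a 40.2% share in 2024. The region's lead stems from substantial investment by cloud service providers, AI research labs, and government-backed initiatives that promote domestic semiconductor production. Close collaboration among chip designers, foundries, and AI solution providers continues to drive market growth. The presence of major technology leaders and sustained funding for AI infrastructure are strengthening North America's competitive advantage in high-performance computing and transformer-based technologies.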

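Major players in the global Transformer-optimized AI chip market include NVIDIA Corporation, Intel Corporation, Advanced Micro Devices (AMD), Samsung Electronics Co., Ltd., Google (Alphabet Inc.), Microsoft Corporation, Tesla, Inc., Qualcomm Technologies, Inc., Baidu, Inc., Huawei Technologies Co., Ltd., Alibaba Group, Amazon Web Services, Apple Inc., Cerebras Systems, Inc., Graphcore Ltd., SiMa.ai, Mythic AI, Groq, Inc., SambaNova Systems, Inc., and Tenstorrent Inc. These leading companies are focusing on innovation, strategic alliances, and manufacturing expansion to strengthen their global position. They are investing heavily in research and development to build energy-efficient, high-throughput chips optimized for transformer and LLM workloads. Partnerships with hyperscalers, cloud service providers, and AI startups are fostering integration across computing ecosystems. Many are pursuing vertical integration by combining software frameworks with hardware solutions to offer complete AI acceleration platforms.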

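Table of Contents

Chapter 1: Methodology and Scope

Chapter 2: Executive Summary

Chapter 3: Industry Insights

- Industry ecosystem analysis

- Industry impact forces

- Growth drivers

- Rising demand for LLMs and transformer architectures

- Rapid growth of AI training and inference workloads

- Domain-specific accelerators for transformer compute

- Edge and distributed transformer deployment

- Advanced packaging and memory-hierarchy innovations

- Industry pitfalls and challenges

- High development costs and R&D complexity

- Thermal management and power efficiency constraints

- Market opportunities

- Expansion into large language models (LLMs) and generative AI

- Edge and distributed AI deployment

- Growth potential analysis

- Regulatory landscape

- North America

- U.S.

- Canada

- Europe

- Asia Pacific

- Latin America

- Middle East and Africa

- Technology landscape

- Current trends

- Emerging technologies

- Pipeline analysis

- Future market trends

- Porter's analysis

- PESTEL analysis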

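Chapter 4: Competitive Landscape

- Introduction

- Company market share analysis

- Global

- North America

- Europe

- Asia Pacific

- Latin America

- Middle East and Africa

- Company matrix analysis

- Competitive analysis of major market players

- Competitive positioning matrix

- Key developments

- Mergers and acquisitions

- Partnerships and collaborations

- New product launches

- Expansion plans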

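Chapter 5: Market Estimates and Forecast, By Chip Type, 2021 - 2034

- Key trends

- Neural Processing Units (NPUs)

- Graphics Processing Units (GPUs)

- Tensor Processing Units (TPUs)

- Application-Specific Integrated Circuits (ASICs)

- Field-Programmable Gate Arrays (FPGAs)

Chapter 6: Market Estimates and Forecast, By Performance Class, 2021 - 2034

- Key trends

- High-Performance Computing (>100 TOPS)

- Mid-Range Performance (10-100 TOPS)

- Edge/Mobile Performance (1-10 TOPS)

- Ultra-Low Power (<1 TOPS)

Chapter 7: Market Estimates and Forecast, By Memory, 2021 - 2034

- Key trends

- High Bandwidth Memory (HBM) Integrated

- On-Chip SRAM Optimized

- Processing-in-Memory (PIM)

- Distributed Memory Systems

Chapter 8: Market Estimates and Forecast, By Application, 2021 - 2034

- Key trends

- Large Language Models (LLMs)

- Computer Vision Transformers (ViTs)

- Multimodal AI Systems

- Generative AI Applications

- Others

Chapter 9: Market Estimates and Forecast, By End Use, 2021 - 2034

- Key trends

- Technology & Cloud Services

- Automotive & Transportation

- Healthcare & Life Sciences

- Financial Services

- Telecommunications

- Industrial & Manufacturing

- Others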

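Chapter 10: Market Estimates and Forecast, By Region, 2021 - 2034

- Key trends

- North America

- U.S.

- Canada

- Europe

- Germany

- UK

- France

- Italy

- Spain

- Netherlands

- Asia Pacific

- China

- India

- Japan

- Australia

- South Korea

- Latin America

- Brazil

- Mexico

- Argentina

- Middle East and Africa

- South Africa

- Saudi Arabia

- UAE

Chapter 11: Company Profiles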

- Advanced Micro Devices (AMD)

- Alibaba Group

- Amazon Web Services

- Apple Inc.

- Baidu, Inc.

- Cerebras Systems, Inc.

- Google (Alphabet Inc.)

- Groq, Inc.

- Graphcore Ltd.

- Huawei Technologies Co., Ltd.

- Intel Corporation

- Microsoft Corporation

- Mythic AI

- NVIDIA Corporation

- Qualcomm Technologies, Inc.

- Samsung Electronics Co., Ltd.

- SiMa.ai

- SambaNova Systems, Inc.

- Tenstorrent Inc.

- Tesla, Inc.

The Global Transformer-Optimized AI Chip Market was valued at USD 44.3 billion in 2024 and is estimated to grow at a CAGR of 20.2% to reach USD 278.2 billion by 2034.

The market is witnessing rapid growth as industries increasingly demand specialized hardware designed to accelerate transformer-based architectures and large language model (LLM) operations. These chips are becoming essential in AI training and inference workloads where high throughput, reduced latency, and energy efficiency are critical. The shift toward domain-specific architectures featuring transformer-optimized compute units, high-bandwidth memory, and advanced interconnect technologies is fueling adoption across next-generation AI ecosystems. Sectors such as cloud computing, edge AI, and autonomous systems are integrating these chips to handle real-time analytics, generative AI, and multi-modal applications. The emergence of chiplet integration and domain-specific accelerators is transforming how AI systems scale, enabling higher performance and efficiency. At the same time, developments in memory hierarchies and packaging technologies are reducing latency while improving computational density, allowing transformers to operate closer to processing units. These advancements are reshaping AI infrastructure globally, with transformer-optimized chips positioned at the center of high-performance, energy-efficient, and scalable AI processing.

| Market Scope | |
|---|---|
| Start Year | 2024 |
| Forecast Year | 2025-2034 |
| Start Value | $44.3 Billion |
| Forecast Value | $278.2 Billion |
| CAGR | 20.2% |
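The headline figures in the Market Scope table can be cross-checked with the standard compound annual growth rate formula. The sketch below is only an arithmetic check, not the report's forecast model. It assumes ten compounding periods (base year 2024 to 2034), and the function names `implied_cagr` and `project_value` are illustrative.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate implied by a start value and an end value."""
    return (end_value / start_value) ** (1 / periods) - 1


def project_value(start_value: float, rate: float, periods: int) -> float:
    """Value reached after compounding `rate` for `periods` years."""
    return start_value * (1 + rate) ** periods


# Figures from the Market Scope table, in USD billions.
start_usd_bn = 44.3
forecast_usd_bn = 278.2
periods = 2034 - 2024  # assumption: 2024 is the base year, so 10 periods

rate = implied_cagr(start_usd_bn, forecast_usd_bn, periods)
projected = project_value(start_usd_bn, 0.202, periods)

print(f"Implied CAGR from the two endpoints: {rate:.1%}")
print(f"2034 value when compounding the rounded 20.2% rate: {projected:.1f}")
```

Under that assumption the endpoints imply a rate that rounds to the published 20.2%. Compounding the rounded rate lands slightly above USD 278.2 billion, which is the kind of gap expected when a rate is reported to one decimal place.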

The graphics processing unit (GPU) segment held a 32.2% share in 2024. GPUs are widely adopted due to their mature ecosystem, strong parallel computing capability, and proven effectiveness in executing transformer-based workloads. Their ability to deliver massive throughput for training and inference of large language models makes them essential across industries such as finance, healthcare, and cloud-based services. With their flexibility, extensive developer support, and high computational density, GPUs remain the foundation of AI acceleration in data centers and enterprise environments.

The high-performance computing (HPC) segment, covering chips that exceed 100 TOPS, generated USD 16.5 billion in 2024, capturing a 37.2% share. These chips are indispensable for training large transformer models that require enormous parallelism and extremely high throughput. HPC-class processors are deployed across AI-driven enterprises, hyperscale data centers, and research facilities to handle demanding applications such as complex multi-modal AI, large-batch inference, and LLM training involving billions of parameters. Their contribution to accelerating computing workloads has positioned HPC chips as a cornerstone of AI innovation and infrastructure scalability.
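The segment figures quoted above can be checked against the 2024 market total with simple share arithmetic. The sketch below assumes every share is measured against the USD 44.3 billion global total. The GPU dollar value it derives is an illustrative estimate from rounded inputs, not a figure stated in the report.

```python
def share_of_total(segment_value: float, total_value: float) -> float:
    """Fraction of the total market accounted for by one segment."""
    return segment_value / total_value


def value_from_share(share: float, total_value: float) -> float:
    """Segment value implied by a share of the total market."""
    return share * total_value


total_2024_usd_bn = 44.3  # global market value in 2024 (from the report)
hpc_usd_bn = 16.5         # >100 TOPS segment revenue in 2024 (from the report)

hpc_share = share_of_total(hpc_usd_bn, total_2024_usd_bn)

# Assumption: the 32.2% GPU share is a share of the same 2024 total.
gpu_usd_bn_estimate = value_from_share(0.322, total_2024_usd_bn)

print(f"HPC share implied by USD 16.5 bn: {hpc_share:.1%}")
print(f"GPU segment value implied by a 32.2% share: {gpu_usd_bn_estimate:.2f} USD bn")
```

The implied HPC share matches the published 37.2%, so the revenue and share figures for that segment are consistent with the 2024 total.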

North America Transformer-Optimized AI Chip Market held a 40.2% share in 2024. The region's leadership stems from substantial investments by cloud service providers, AI research labs, and government-backed initiatives promoting domestic semiconductor production. Strong collaboration among chip designers, foundries, and AI solution providers continues to propel market growth. The presence of major technology leaders and continued funding in AI infrastructure development are strengthening North America's competitive advantage in high-performance computing and transformer-based technologies.

Prominent companies operating in the Global Transformer-Optimized AI Chip Market include NVIDIA Corporation, Intel Corporation, Advanced Micro Devices (AMD), Samsung Electronics Co., Ltd., Google (Alphabet Inc.), Microsoft Corporation, Tesla, Inc., Qualcomm Technologies, Inc., Baidu, Inc., Huawei Technologies Co., Ltd., Alibaba Group, Amazon Web Services, Apple Inc., Cerebras Systems, Inc., Graphcore Ltd., SiMa.ai, Mythic AI, Groq, Inc., SambaNova Systems, Inc., and Tenstorrent Inc. Leading companies in the Transformer-Optimized AI Chip Market are focusing on innovation, strategic alliances, and manufacturing expansion to strengthen their global presence. Firms are heavily investing in research and development to create energy-efficient, high-throughput chips optimized for transformer and LLM workloads. Partnerships with hyperscalers, cloud providers, and AI startups are fostering integration across computing ecosystems. Many players are pursuing vertical integration by combining software frameworks with hardware solutions to offer complete AI acceleration platforms.

Table of Contents

Chapter 1 Methodology and Scope

- 1.1 Market scope and definition

- 1.2 Research design

- 1.2.1 Research approach

- 1.2.2 Data collection methods

- 1.3 Data mining sources

- 1.3.1 Global

- 1.3.2 Regional/Country

- 1.4 Base estimates and calculations

- 1.4.1 Base year calculation

- 1.4.2 Key trends for market estimation

- 1.5 Primary research and validation

- 1.5.1 Primary sources

- 1.6 Forecast model

- 1.7 Research assumptions and limitations

Chapter 2 Executive Summary

- 2.1 Industry 360° synopsis

- 2.2 Key market trends

- 2.2.1 Chip types trends

- 2.2.2 Performance class trends

- 2.2.3 Memory trends

- 2.2.4 Application trends

- 2.2.5 End use trends

- 2.2.6 Regional trends

- 2.3 CXO perspectives: Strategic imperatives

- 2.3.1 Key decision points for industry executives

- 2.3.2 Critical success factors for market players

- 2.4 Future outlook and strategic recommendations

Chapter 3 Industry Insights

- 3.1 Industry ecosystem analysis

- 3.2 Industry impact forces

- 3.2.1 Growth drivers

- 3.2.1.1 Rising demand for LLMs and transformer architectures

- 3.2.1.2 Rapid growth of AI training and inference workloads

- 3.2.1.3 Domain-specific accelerators for transformer compute

- 3.2.1.4 Edge and distributed transformer deployment

- 3.2.1.5 Advanced packaging and memory-hierarchy innovations

- 3.2.2 Industry pitfalls and challenges

- 3.2.2.1 High Development Costs and R&D Complexity

- 3.2.2.2 Thermal Management and Power Efficiency Constraints

- 3.2.3 Market opportunities

- 3.2.3.1 Expansion into Large Language Models (LLMs) and Generative AI

- 3.2.3.2 Edge and Distributed AI Deployment

- 3.3 Growth potential analysis

- 3.4 Regulatory landscape

- 3.4.1 North America

- 3.4.1.1 U.S.

- 3.4.1.2 Canada

- 3.4.2 Europe

- 3.4.3 Asia Pacific

- 3.4.4 Latin America

- 3.4.5 Middle East and Africa

- 3.5 Technology landscape

- 3.5.1 Current trends

- 3.5.2 Emerging technologies

- 3.6 Pipeline analysis

- 3.7 Future market trends

- 3.8 Porter's analysis

- 3.9 PESTEL analysis

Chapter 4 Competitive Landscape, 2024

- 4.1 Introduction

- 4.2 Company market share analysis

- 4.2.1 Global

- 4.2.2 North America

- 4.2.3 Europe

- 4.2.4 Asia Pacific

- 4.2.5 Latin America

- 4.2.6 Middle East and Africa

- 4.3 Company matrix analysis

- 4.4 Competitive analysis of major market players

- 4.5 Competitive positioning matrix

- 4.6 Key developments

- 4.6.1 Merger and acquisition

- 4.6.2 Partnership and collaboration

- 4.6.3 New product launches

- 4.6.4 Expansion plans

Chapter 5 Market Estimates and Forecast, By Chip Type, 2021 - 2034 ($ Bn)

- 5.1 Key trends

- 5.2 Neural Processing Units (NPUs)

- 5.3 Graphics Processing Units (GPUs)

- 5.4 Tensor Processing Units (TPUs)

- 5.5 Application-Specific Integrated Circuits (ASICs)

- 5.6 Field-Programmable Gate Arrays (FPGAs)

Chapter 6 Market Estimates and Forecast, By Performance Class, 2021 - 2034 ($ Bn)

- 6.1 Key trends

- 6.2 High-Performance Computing (>100 TOPS)

- 6.3 Mid-Range Performance (10-100 TOPS)

- 6.4 Edge/Mobile Performance (1-10 TOPS)

- 6.5 Ultra-Low Power (<1 TOPS)

Chapter 7 Market Estimates and Forecast, By Memory, 2021 - 2034 ($ Bn)

- 7.1 Key trends

- 7.2 High Bandwidth Memory (HBM) Integrated

- 7.3 On-Chip SRAM Optimized

- 7.4 Processing-in-Memory (PIM)

- 7.5 Distributed Memory Systems

Chapter 8 Market Estimates and Forecast, By Application, 2021 - 2034 ($ Bn)

- 8.1 Key trends

- 8.2 Large Language Models (LLMs)

- 8.3 Computer Vision Transformers (ViTs)

- 8.4 Multimodal AI Systems

- 8.5 Generative AI Applications

- 8.6 Others

Chapter 9 Market Estimates and Forecast, By End Use, 2021 - 2034 ($ Bn)

- 9.1 Key trends

- 9.2 Technology & Cloud Services

- 9.3 Automotive & Transportation

- 9.4 Healthcare & Life Sciences

- 9.5 Financial Services

- 9.6 Telecommunications

- 9.7 Industrial & Manufacturing

- 9.8 Others

Chapter 10 Market Estimates and Forecast, By Region, 2021 - 2034 ($ Bn)

- 10.1 Key trends

- 10.2 North America

- 10.2.1 U.S.

- 10.2.2 Canada

- 10.3 Europe

- 10.3.1 Germany

- 10.3.2 UK

- 10.3.3 France

- 10.3.4 Italy

- 10.3.5 Spain

- 10.3.6 Netherlands

- 10.4 Asia Pacific

- 10.4.1 China

- 10.4.2 India

- 10.4.3 Japan

- 10.4.4 Australia

- 10.4.5 South Korea

- 10.5 Latin America

- 10.5.1 Brazil

- 10.5.2 Mexico

- 10.5.3 Argentina

- 10.6 Middle East and Africa

- 10.6.1 South Africa

- 10.6.2 Saudi Arabia

- 10.6.3 UAE

Chapter 11 Company Profiles

- 11.1 Advanced Micro Devices (AMD)

- 11.2 Alibaba Group

- 11.3 Amazon Web Services

- 11.4 Apple Inc.

- 11.5 Baidu, Inc.

- 11.6 Cerebras Systems, Inc.

- 11.7 Google (Alphabet Inc.)

- 11.8 Groq, Inc.

- 11.9 Graphcore Ltd.

- 11.10 Huawei Technologies Co., Ltd.

- 11.11 Intel Corporation

- 11.12 Microsoft Corporation

- 11.13 Mythic AI

- 11.14 NVIDIA Corporation

- 11.15 Qualcomm Technologies, Inc.

- 11.16 Samsung Electronics Co., Ltd.

- 11.17 SiMa.ai

- 11.18 SambaNova Systems, Inc.

- 11.19 Tenstorrent Inc.

- 11.20 Tesla, Inc.