Market Research Report

Product code

1836526

Micro Server - Market Share Analysis, Industry Trends & Statistics, Growth Forecasts (2025 - 2030)

Note: The content of this page may differ from the latest version. Please contact us for details.

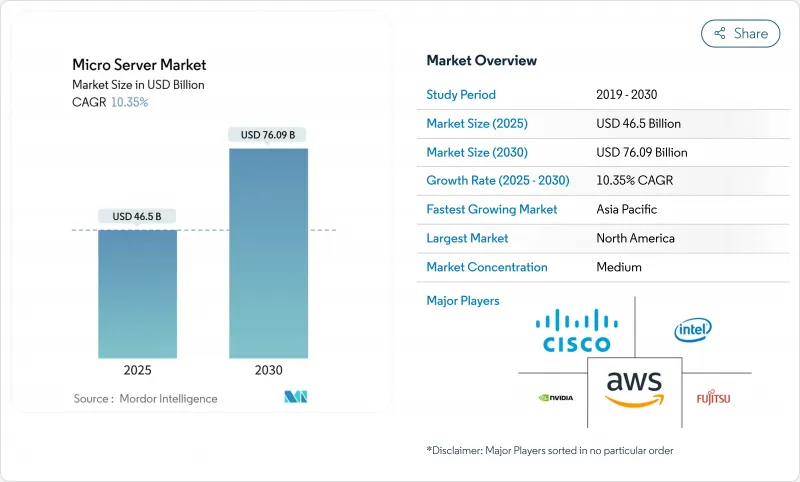

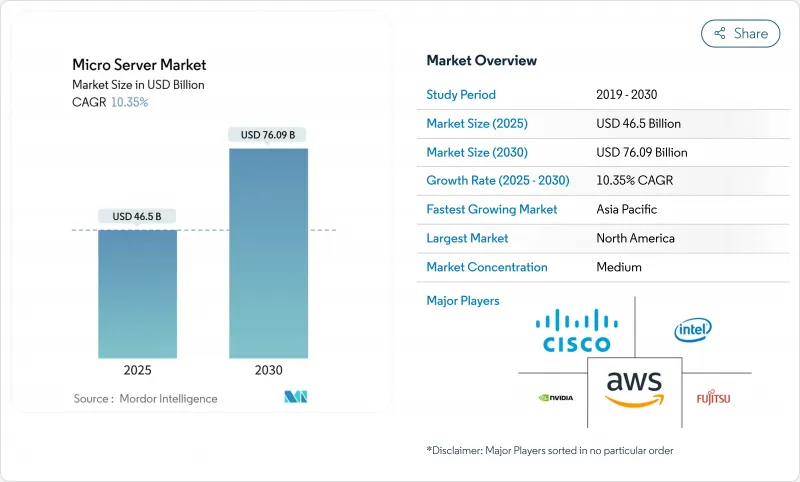

The micro server market size stands at USD 46.50 billion in 2025 and is forecast to reach USD 76.09 billion by 2030, a 10.4% CAGR over the period.
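The growth rate quoted above can be checked against the two endpoint figures. The following is a minimal sketch, assuming the standard compound annual growth rate formula and a five-year span from 2025 to 2030; the function name `cagr` and the constants are illustrative and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures quoted in the report, in USD billion.
SIZE_2025 = 46.50
SIZE_2030 = 76.09

# Assumption: the forecast period spans five compounding years (2025 to 2030).
implied_rate = cagr(SIZE_2025, SIZE_2030, years=5)
print(f"Implied CAGR 2025-2030: {implied_rate:.2%}")
```

Under these assumptions the implied rate rounds to the 10.4% stated in the report.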

Rapid densification of data-center footprints, demand for low-power compute nodes to support AI inference, and tightening energy-efficiency mandates are the primary tailwinds. Vendor competition spans established x86 server makers, cloud providers designing custom silicon, and new ARM-based entrants that promise higher performance per watt. Hardware continues to dominate procurement budgets, yet managed services grow quickly as enterprises grapple with heterogeneous architectures. Regionally, North America leads on the back of hyperscale investments, while Asia-Pacific shows the fastest expansion because of SME digitalisation and 5G roll-outs.

Global Micro Server Market Trends and Insights

Surge in Hyperscale and Edge-Cloud Build-Outs

Hyperscale operators are standardising factory-integrated, high-density sleds that shorten deployment cycles and improve watt-per-compute metrics. Infrastructure Masons advocates campus-style "clean-energy parks" sized at multi-gigawatt scale, while Lancium plans sites that may reach 6 GW of capacity, illustrating how power availability now guides server architecture choices. Telecommunications companies extend the same logic to metro edge sites, installing micro data centres adjacent to 5G nodes to meet sub-10 millisecond latency targets; ruggedised micro servers allow rapid provisioning without full-scale facilities. Convergence of hyperscale economics with edge proximity therefore cements the micro server market as the preferred platform for balancing density, cost, and power efficiency.

AI Inference Workloads Require Dense, Low-Power Nodes

Inference-oriented traffic now dominates many production AI stacks, pushing server design toward memory bandwidth and accelerator integration over raw CPU frequency. Amazon Web Services' Graviton 4, built on Arm Neoverse V2, integrates 96 cores and 12-channel DDR5-5600 to keep inference latency within budget while trimming energy draw. Dell's 4U PowerEdge XE9680L packages eight NVIDIA Blackwell GPUs with direct liquid cooling, delivering high performance per watt inside standard racks. These blueprints underscore an architectural pivot: micro servers must move data efficiently rather than simply compute faster, embedding accelerators that disperse inference workloads across clusters.

Fragmented Form-Factor and I/O Standards

Despite the Open Compute Project's M-XIO and Modular Hardware System specifications, variance in power pins, PCIe lanes, and out-of-band interfaces complicates swapping sleds across vendors. Enterprises therefore juggle multiple spares inventories and bespoke management stacks, diluting economies of scale. Lack of plug-and-play interoperability also slows the creation of third-party accelerator modules that could otherwise ride a common backplane. Vendors that pre-certify cross-compatibility or bundle holistic support contracts are better positioned until true standardisation emerges.

Other drivers and restraints analyzed in the detailed report include:

- SME Digitalisation Boom in Emerging Markets

- Rising Edge-Computing Demand from 5G and IoT Roll-Outs

- High Software-Porting Cost from x86 to Arm/RISC-V

For the complete list of drivers and restraints, see the table of contents.

Segment Analysis

The micro server market size by component reached USD 30.55 billion for hardware in 2024, equivalent to 65.6% share, confirming capital-intensive refresh cycles within hyperscale and edge facilities. Services followed at USD 15.97 billion but will expand at 11.9% CAGR through 2030, reflecting enterprise reliance on managed infrastructure to tame architectural heterogeneity. Much of the spend funneled into design-for-AI racks, liquid cooling retrofits, and remote fleet orchestration.
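The component split can be reproduced from the two revenue figures. This is a rough sketch, assuming hardware and services are the only two components (as the segmentation suggests); the variable names are illustrative. Because the inputs are rounded to two decimals, the computed share may differ from the published 65.6% by about a tenth of a percentage point.

```python
# Component revenue quoted in the report, in USD billion.
segments_usd_bn = {"hardware": 30.55, "services": 15.97}

# Assumption: these two components make up the whole market.
total_usd_bn = sum(segments_usd_bn.values())

# Share of each component in the combined total.
shares = {name: value / total_usd_bn for name, value in segments_usd_bn.items()}

for name, share in shares.items():
    print(f"{name}: USD {segments_usd_bn[name]:.2f} bn, {share:.1%} of total")
print(f"total: USD {total_usd_bn:.2f} bn")
```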

Hardware revenue is anchored by continued shipments of dense 1U twin-node sleds and 4U GPU trays that integrate Arm, x86, and custom ASICs. Dell shipped USD 2.9 billion in AI-optimised servers during 2025 Q1, a single-vendor signal of the hardware cycle's strength. Services growth stems from demand for remote BIOS provisioning, container orchestration, and lifecycle security patching, tasks that multicloud teams increasingly outsource. Vendors that wrap consulting, firmware customisation, and 24-hour support around micro server fleets capture sticky annuity streams, cushioning volatility in capital budgets.

Rack units between 1U and 4U captured 60.1% of micro server market share in 2024, owing to their fit with existing aisle layouts and standardised power feeds. However, rugged edge boxes are on track for an 11.6% CAGR, far outpacing legacy chassis as telecom and industrial players push compute to constrained sites. Many designs adopt front-serviceable soaked-plate cooling and -48 V DC inputs, aligning with outdoor 5G cabinets.

The micro server market size for modular boxes will rise as OEMs pre-integrate networking, AI accelerators, and battery backup into shoebox-scale enclosures. Vicor-backed reference designs show 35% lower energy use per inference operation compared with typical rack nodes, attractive where grid capacity is scarce. Meanwhile, multi-node microcloud sleds strike a balance, fitting eight single-socket boards into a 3U frame to boost rack density without sacrificing serviceability.

The micro server market report is segmented by component (hardware and services), form factor (rack, multi-node microcloud, and modular rugged edge box), application (data centre, cloud computing, media/content storage, and more), end-user (large enterprises and small and medium enterprises (SMEs)), and geography. Market forecasts are provided in terms of value (USD).

Geography Analysis

North America generated USD 17.44 billion of revenue in 2024, equal to 37.5% of the micro server market, thanks to heavy hyperscale capex and government preference for defence-grade domestic supply chains. The Georgia Public Service Commission now obliges large-load customers to shoulder upfront grid-upgrade costs, nudging data-centre operators toward more energy-efficient micro server nodes. Federal export controls on AI accelerators further incentivise U.S.-based assembly and testing, solidifying local value retention.
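A regional revenue figure and its share together imply a market total, which gives a quick consistency check on the numbers above. This is a minimal sketch; the helper `implied_total` is a hypothetical name, and the result is only as precise as the rounded inputs.

```python
def implied_total(segment_value: float, share: float) -> float:
    """Back out the whole-market value from one segment's value and share."""
    if not 0 < share <= 1:
        raise ValueError("share must be in the interval (0, 1]")
    return segment_value / share


# North American revenue (USD billion) and share as quoted in the report.
NA_REVENUE_USD_BN = 17.44
NA_SHARE = 0.375

na_implied_total = implied_total(NA_REVENUE_USD_BN, NA_SHARE)
print(f"Implied market total: USD {na_implied_total:.2f} bn")
```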

Europe follows, propelled by stringent energy-efficiency and cyber-resilience laws. The updated Energy Efficiency Directive mandates annual reporting for data-centre sites above 100 kW IT load, while the Digital Operational Resilience Act compels financial firms to bolster uptime and security. These rules elevate demand for micro servers that deliver higher compute per kilowatt, aiding operators in meeting power-usage-effectiveness targets without new grid connections.

Asia-Pacific is the fastest-growing territory, forecast at 11.2% CAGR, as 5G densification and SME cloud adoption converge. Compal Electronics and Kalyani Group signed an MoU to manufacture servers in India, aligning with "Make in India" incentives aimed at localising the compute value chain. Governments across ASEAN and South Asia promote domestically hosted data to spur digital services GDP contributions, paving the way for region-specific micro server designs optimised for humid climates and limited utility power.

Companies profiled in the report:

- Dell Technologies

- Lenovo

- Foxconn

- Ampere Computing

- Advanced Micro Devices

- Huawei

- Cisco Systems

- Hewlett Packard Enterprise

- Quanta Computer

- Inventec

- AWS (Graviton)

- Nvidia

- Fujitsu

- Penguin Computing

- Super Micro Computer

- Wistron

- Gigabyte Technology

- Intel

- Marvell

- NEC

- Plat'Home

Additional Benefits:

- The market estimate (ME) sheet in Excel format

- 3 months of analyst support

TABLE OF CONTENTS

1 INTRODUCTION

- 1.1 Study Assumptions and Market Definition

- 1.2 Scope of the Study

2 RESEARCH METHODOLOGY

3 EXECUTIVE SUMMARY

4 MARKET LANDSCAPE

- 4.1 Market Overview

- 4.2 Market Drivers

- 4.2.1 Surge in hyperscale and edge-cloud build-outs

- 4.2.2 AI inference workloads require dense, low-power nodes

- 4.2.3 SME digitalisation boom in emerging markets

- 4.2.4 Rising edge-computing demand from 5G and IoT roll-outs

- 4.2.5 Data-centre energy-efficiency and carbon-tax mandates

- 4.2.6 Reshoring to "trusted" supply chains for defence-grade micro-servers

- 4.3 Market Restraints

- 4.3.1 Fragmented form-factor and I/O standards

- 4.3.2 High software-porting cost from x86 to Arm/RISC-V

- 4.3.3 Export-control uncertainty on advanced processors

- 4.3.4 Slow maturity of open-source RISC-V ecosystems

- 4.4 Value Chain Analysis

- 4.5 Regulatory Landscape

- 4.6 Technological Outlook

- 4.7 Porter's Five Forces Analysis

- 4.7.1 Bargaining Power of Suppliers

- 4.7.2 Bargaining Power of Buyers

- 4.7.3 Threat of New Entrants

- 4.7.4 Threat of Substitutes

- 4.7.5 Intensity of Competitive Rivalry

- 4.8 Investment Analysis

- 4.9 Assessment of the Impact of Macroeconomic Trends on the Market

5 MARKET SIZE AND GROWTH FORECASTS (VALUE)

- 5.1 By Component

- 5.1.1 Hardware

- 5.1.2 Services

- 5.2 By Form Factor

- 5.2.1 Rack (1U-4U)

- 5.2.2 Multi-node Microcloud

- 5.2.3 Modular Rugged Edge Box

- 5.3 By Application

- 5.3.1 Data Centre

- 5.3.2 Cloud Computing

- 5.3.3 Media / Content Storage

- 5.3.4 Data Analytics and AI

- 5.3.5 IoT / Industrial Edge

- 5.4 By End-User

- 5.4.1 Large Enterprises

- 5.4.2 Small and Medium Enterprises (SMEs)

- 5.5 By Geography

- 5.5.1 North America

- 5.5.1.1 United States

- 5.5.1.2 Canada

- 5.5.1.3 Mexico

- 5.5.2 Europe

- 5.5.2.1 Germany

- 5.5.2.2 United Kingdom

- 5.5.2.3 France

- 5.5.2.4 Italy

- 5.5.2.5 Spain

- 5.5.2.6 Rest of Europe

- 5.5.3 Asia-Pacific

- 5.5.3.1 China

- 5.5.3.2 Japan

- 5.5.3.3 India

- 5.5.3.4 South Korea

- 5.5.3.5 Australia

- 5.5.3.6 Rest of Asia-Pacific

- 5.5.4 South America

- 5.5.4.1 Brazil

- 5.5.4.2 Argentina

- 5.5.4.3 Rest of South America

- 5.5.5 Middle East and Africa

- 5.5.5.1 Middle East

- 5.5.5.1.1 Saudi Arabia

- 5.5.5.1.2 United Arab Emirates

- 5.5.5.1.3 Turkey

- 5.5.5.1.4 Rest of Middle East

- 5.5.5.2 Africa

- 5.5.5.2.1 South Africa

- 5.5.5.2.2 Egypt

- 5.5.5.2.3 Nigeria

- 5.5.5.2.4 Rest of Africa

6 COMPETITIVE LANDSCAPE

- 6.1 Market Concentration

- 6.2 Strategic Moves

- 6.3 Market Share Analysis

- 6.4 Company Profiles (includes Global Level Overview, Market Level Overview, Core Segments, Financials as Available, Strategic Information, Market Rank/Share for Key Companies, Products and Services, and Recent Developments)

- 6.4.1 Dell Technologies

- 6.4.2 Lenovo

- 6.4.3 Foxconn

- 6.4.4 Ampere Computing

- 6.4.5 Advanced Micro Devices

- 6.4.6 Huawei

- 6.4.7 Cisco Systems

- 6.4.8 Hewlett Packard Enterprise

- 6.4.9 Quanta Computer

- 6.4.10 Inventec

- 6.4.11 AWS (Graviton)

- 6.4.12 Nvidia

- 6.4.13 Fujitsu

- 6.4.14 Penguin Computing

- 6.4.15 Super Micro Computer

- 6.4.16 Wistron

- 6.4.17 Gigabyte Technology

- 6.4.18 Intel

- 6.4.19 Marvell

- 6.4.20 NEC

- 6.4.21 Plat'Home

7 MARKET OPPORTUNITIES AND FUTURE OUTLOOK

- 7.1 White-space and Unmet-Need Assessment