Market Research Report

Product code: 1850107

High Performance Computing - Market Share Analysis, Industry Trends & Statistics, Growth Forecasts (2025 - 2030)

Note: The content of this page may differ from the latest version. Please contact us for details.

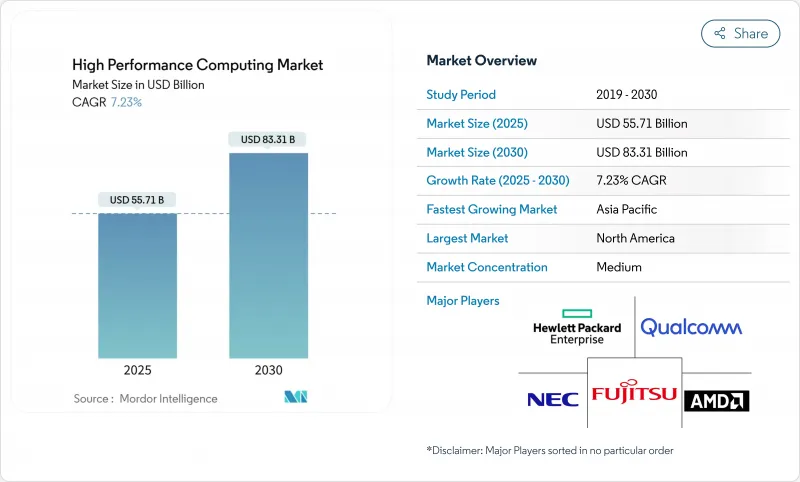

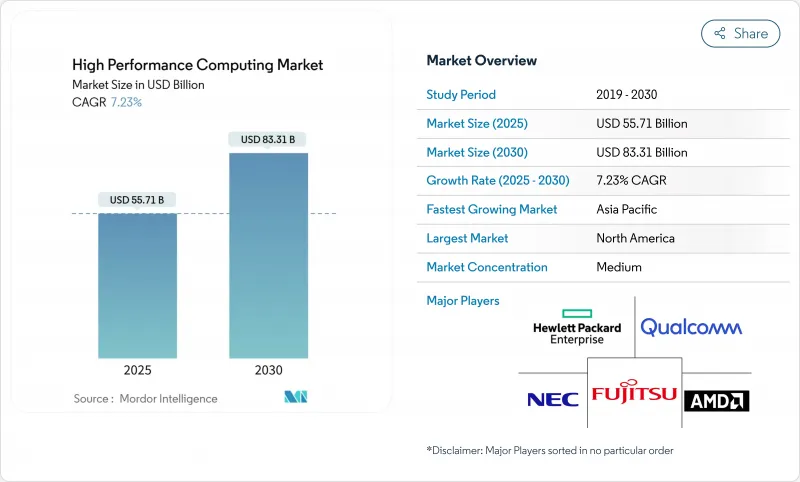

The high-performance computing market size is valued at USD 55.7 billion in 2025 and is forecast to reach USD 83.3 billion by 2030, advancing at a 7.23% CAGR.
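The headline figures can be cross-checked with the standard compound-growth formula. The sketch below is only an arithmetic illustration: it assumes 2025 is the base year and that growth compounds over five annual periods, neither of which the report states explicitly. The function names `cagr` and `project` are illustrative, not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD billion.
SIZE_2025 = 55.7
SIZE_2030 = 83.3
STATED_CAGR = 0.0723

# Assumption: 2025 is the base year, so 2025 -> 2030 spans five periods.
implied = cagr(SIZE_2025, SIZE_2030, years=5)
projected = project(SIZE_2025, STATED_CAGR, years=5)

print(f"CAGR implied by the two endpoints: {implied:.2%}")
print(f"2030 size at the stated CAGR: USD {projected:.1f} billion")
```

Under these assumptions the two endpoints imply a rate of roughly 8.4%, while compounding at the stated 7.23% gives about USD 79 billion in 2030. The gap may come from a different base year or from rounding in the source; the report does not say.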

Momentum is shifting from pure scientific simulation toward AI-centric workloads, so demand is moving to GPU-rich clusters that can train foundation models while still running physics-based codes. Sovereign AI programs are pulling government buyers into direct competition with hyperscalers for the same accelerated systems, tightening supply and reinforcing the appeal of liquid-cooled architectures that tame dense power envelopes. Hardware still anchors procurement budgets, yet managed services and HPC-as-a-Service are rising quickly as organizations prefer pay-per-use models that match unpredictable AI demand curves. Parallel market drivers include broader adoption of hybrid deployments, accelerated life-sciences pipelines, and mounting sustainability mandates that force datacenter redesigns.

Global High Performance Computing Market Trends and Insights

The Explosion of AI/ML Training Workloads in U.S. Federal Labs & Tier-1 Cloud Providers

Federal laboratories now design procurements around mixed AI and simulation capacity, effectively doubling addressable peak-performance demand in the high-performance computing market. The Department of Health and Human Services framed AI-ready compute as core to its 2025 research strategy, spurring labs to buy GPU-dense nodes that pivot between exascale simulations and 1-trillion-parameter model training. The Department of Energy secured USD 1.152 billion for AI-HPC convergence in FY 2025. Tier-1 clouds responded with sovereign AI zones that blend FIPS-validated security and advanced accelerators, and industry trackers estimate 70% of first-half 2024 AI-infrastructure spend went to GPU-centric designs. The high-performance computing market consequently enjoys a structural lift in top-end system value, but component shortages heighten pricing volatility. Vendors now bundle liquid cooling, optical interconnects, and zero-trust firmware to win federal awards, reshaping the channel.

Surging Demand for GPU-Accelerated Molecular Dynamics in Asian Pharma Outsourcing Hubs

Contract research organizations in India, China, and Japan are scaling DGX-class clusters to shorten lead molecules' path to the clinic. Tokyo-1, announced by Mitsui & Co. and NVIDIA in 2024, offers Japanese drug makers dedicated H100 instances tailored for biomolecular workloads. India's CRO sector, projected to reach USD 2.5 billion by 2030 at a 10.75% CAGR, layers AI-driven target identification atop classical dynamics, reinforcing demand for cloud-delivered supercomputing. Researchers now push GENESIS software to simulate 1.6 billion atoms, opening the way to studying large-protein interactions. That capability anchors regional leadership in outsourced discovery and amplifies Asia-Pacific's pull on global accelerator supply lines. For the high-performance computing market, pharma workloads act as a hedge against cyclical manufacturing demand.

Escalating Datacenter Water-Usage Restrictions in Drought-Prone U.S. States

Legislation in Virginia and Maryland forces disclosure of water draw, while Phoenix pilots Microsoft's zero-water cooling that saves 125 million liters per site each year. Utilities now limit new megawatt hookups unless operators commit to liquid or rear-door heat exchange. Capital outlays can climb 15-20%, squeezing return thresholds in the high-performance computing market and prompting a shift toward immersion or cooperative-air systems. Suppliers of cold-plate manifolds and dielectric fluids therefore gain leverage. Operators diversify sites into cooler climates, but latency and data-sovereignty policies constrain relocation options, so design innovation rather than relocation must resolve the cooling-water tension.

Other drivers and restraints analyzed in the detailed report include:

- Mandatory Automotive ADAS Simulation Compliance in EU EURO-NCAP 2030 Roadmap

- National Exascale Initiatives Driving Indigenous Processor Adoption in China & India

- Global Shortage of HBM3e Memory Constraining GPU Server Shipments 2024-26

For the complete list of drivers and restraints, see the table of contents.

Segment Analysis

Hardware accounted for 55.3% of the high-performance computing market size in 2024, reflecting continued spend on servers, interconnects, and parallel storage. Managed offerings, however, are growing at a 14.7% CAGR and reshaping procurement logic as CFOs favor OPEX over depreciating assets. System OEMs embed metering hooks so clusters can be billed by node-hour, mirroring hyperscale cloud economics. The acceleration of AI inference pipelines adds unpredictable burst demand, pushing enterprises toward consumption models that avoid stranded capacity. Lenovo's TruScale, Dell's Apex, and HPE's GreenLake now bundle supercomputing nodes, scheduler software, and service-level agreements under one invoice. Vendors differentiate through turnkey liquid cooling and optics that cut deployment cycles from months to weeks.

Services' momentum signals that future value will center on orchestration, optimization, and security wrappers rather than on commodity motherboard counts. Enterprises migrating finite-element analysis or omics workloads appreciate transparent per-job costing that aligns compute use with grant funding or manufacturing milestones. Compliance teams also prefer managed offerings that keep data on-premise yet allow peaks to spill into provider-operated annex space. The high-performance computing market thus moves toward a spectrum where bare-metal purchase and full public-cloud rental are endpoints, and pay-as-you-go on customer premises sits in the middle.
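The node-hour metering and per-job costing described above can be illustrated with a small calculation. This is a minimal sketch, not any vendor's billing logic: the `JobRecord` fields, the project names, and both rates are hypothetical values chosen for illustration. It also assumes jobs that spill into provider-operated annex space are billed at a separate burst rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobRecord:
    """One metered job. All values used below are hypothetical."""
    project: str
    nodes: int
    hours: float
    on_premise: bool  # False: the job spilled into provider-operated annex space


def node_hours(job: JobRecord) -> float:
    """Metered usage of a job: node count times wall-clock hours."""
    return job.nodes * job.hours


def invoice(jobs, base_rate: float, burst_rate: float) -> dict:
    """Sum charges per project; annex (burst) jobs use the burst rate."""
    totals = {}
    for job in jobs:
        rate = base_rate if job.on_premise else burst_rate
        totals[job.project] = totals.get(job.project, 0.0) + node_hours(job) * rate
    return totals


jobs = [
    JobRecord("fea-crash-sim", nodes=16, hours=2.5, on_premise=True),
    JobRecord("omics-align", nodes=8, hours=10.0, on_premise=True),
    JobRecord("omics-align", nodes=32, hours=1.0, on_premise=False),
]

# Hypothetical rates in USD per node-hour.
bill = invoice(jobs, base_rate=3.0, burst_rate=5.0)
for project_name, amount in bill.items():
    print(f"{project_name}: USD {amount:.2f}")
```

Grouping charges by project is what lets a cost line be matched against a grant or a manufacturing milestone, which is the appeal of per-job costing noted above.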

On-premise infrastructures held 67.8% of the high-performance computing market share in 2024 because mission-critical codes require deterministic latency and tight data governance. Yet cloud-resident clusters grow at 11.2% CAGR through 2030 as accelerated instances become easier to rent by the minute. Shared sovereignty frameworks let agencies keep sensitive datasets on local disks while bursting anonymized workloads to commercial clouds. CoreWeave secured a five-year USD 11.9 billion agreement with OpenAI, signalling how specialized AI clouds attract both public and private customers. System architects now design software-defined fabrics that re-stage containers seamlessly between sites.

Hybrid adoption will likely dominate going forward, blending edge cache nodes, local liquid-cooled racks, and leased GPU pods. Interconnect abstractions such as Omnipath or Quantum-2 InfiniBand allow the scheduler to ignore physical location, treating every accelerator as a pool. That capability makes workload placement a policy decision driven by cost, security, and sustainability rather than topology. As a result, the high-performance computing market evolves into a network of federated resources where procurement strategy centers on bandwidth economics and data-egress fees rather than capex.
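To make the idea of placement as a policy decision concrete, the sketch below picks a site for a job by filtering on a security rule and then minimizing a cost that includes data-egress fees and an optional carbon charge. Everything in it is an assumption made for illustration: the `Site` and `Job` fields, the prices, and the rule that restricted data may only run on sovereign sites. It does not reflect any real scheduler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    price_per_gpu_hour: float      # USD, hypothetical
    egress_fee_per_gb: float       # USD per GB of results moved out
    sovereign: bool                # allowed to host restricted data
    carbon_kg_per_gpu_hour: float  # hypothetical emissions intensity


@dataclass(frozen=True)
class Job:
    gpu_hours: float
    output_gb: float
    restricted_data: bool


def total_cost(job: Job, site: Site, carbon_price_per_kg: float) -> float:
    """Compute cost plus egress fees plus an optional internal carbon charge."""
    compute = job.gpu_hours * site.price_per_gpu_hour
    egress = job.output_gb * site.egress_fee_per_gb
    carbon = job.gpu_hours * site.carbon_kg_per_gpu_hour * carbon_price_per_kg
    return compute + egress + carbon


def place(job: Job, sites, carbon_price_per_kg: float = 0.0) -> Site:
    """Apply the security filter first, then choose the cheapest eligible site."""
    eligible = [s for s in sites if s.sovereign or not job.restricted_data]
    if not eligible:
        raise ValueError("no site satisfies the security policy")
    return min(eligible, key=lambda s: total_cost(job, s, carbon_price_per_kg))


sites = [
    Site("on-prem-rack", 4.0, 0.0, sovereign=True, carbon_kg_per_gpu_hour=0.5),
    Site("leased-gpu-pod", 2.5, 0.09, sovereign=False, carbon_kg_per_gpu_hour=0.3),
]

small_output = Job(gpu_hours=100, output_gb=500, restricted_data=False)
large_output = Job(gpu_hours=100, output_gb=5000, restricted_data=False)
restricted = Job(gpu_hours=100, output_gb=500, restricted_data=True)

for job in (small_output, large_output, restricted):
    print(job, "->", place(job, sites).name)
```

With these made-up numbers, the job with a small output goes to the leased pod, while the same job with ten times the output stays on premises because egress fees outweigh the cheaper GPU hours. That is the sense in which bandwidth economics can matter more than hardware price.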

The high-performance computing market is segmented by component (hardware, software, and services), deployment mode (on-premise, cloud, hybrid), industrial application (government and defense; academic and research institutions; BFSI; manufacturing and automotive engineering; life sciences and healthcare; energy, oil and gas; and other industry applications), chip type (CPU, GPU, FPGA, ASIC / AI accelerators), and geography. The market forecasts are provided in terms of value (USD).

Geography Analysis

North America commanded 40.5% of the high-performance computing market in 2024 as federal agencies injected USD 7 million into the HPC4EI program aimed at energy-efficient manufacturing. The CHIPS Act ignited over USD 450 billion of private fab commitments, setting the stage for 28% of global semiconductor capital expenditure through 2032. Datacenter power draw may climb to 490 TWh by 2030; drought-prone states therefore legislate water-neutral cooling, tilting new capacity toward immersion and rear-door liquid loops. Hyperscalers are accelerating self-designed GPU projects, which reinforces regional dominance but tightens local supply of HBM modules.

Asia-Pacific posts the strongest 9.3% CAGR, driven by sovereign compute agendas and pharma outsourcing clusters. China's carriers intend to buy 17,000 AI servers, mostly from Inspur and Huawei, adding USD 4.1 billion in domestic orders. India's nine PARAM Rudra installations and upcoming Krutrim AI chip build a vertically integrated ecosystem. Japan leverages Tokyo-1 to fast-track clinical candidate screening for large domestic drug makers. These investments enlarge the high-performance computing market size by pairing capital incentives with local talent and regulatory mandates.

Europe sustains momentum through EuroHPC, operating LUMI (386 petaflops), Leonardo (249 petaflops), and MareNostrum 5 (215 petaflops), with JUPITER poised as the region's first exascale machine. Horizon Europe channels EUR 7 billion (USD 7.6 billion) into HPC and AI R&D. Luxembourg's joint funding promotes industry-academia co-design for digital sovereignty. Regional power-price volatility accelerates adoption of direct liquid cooling and renewable matching to control operating costs. South America, the Middle East, and Africa are nascent but invest in seismic modeling, climate forecasting, and genomics, creating greenfield opportunities for modular containerized clusters.

Companies profiled in the report:

- Advanced Micro Devices, Inc.

- NEC Corporation

- Fujitsu Limited

- Qualcomm Incorporated

- Hewlett Packard Enterprise

- Dell Technologies

- Lenovo Group

- IBM Corporation

- Atos SE / Eviden

- Cisco Systems

- NVIDIA Corporation

- Intel Corporation

- Penguin Computing (SMART Global)

- Inspur Group

- Huawei Technologies

- Amazon Web Services

- Microsoft Azure

- Google Cloud Platform

- Oracle Cloud Infrastructure

- Alibaba Cloud

- Dassault Systemes

Additional Benefits:

- The market estimate (ME) sheet in Excel format

- 3 months of analyst support

TABLE OF CONTENTS

1 INTRODUCTION

- 1.1 Study Assumptions and Market Definition

- 1.2 Scope of the Study

2 RESEARCH METHODOLOGY

3 EXECUTIVE SUMMARY

4 MARKET LANDSCAPE

- 4.1 Market Overview

- 4.2 Market Drivers

- 4.2.1 The Explosion of AI/ML Training Workloads in U.S. Federal Labs and Tier-1 Cloud Providers

- 4.2.2 Surging Demand for GPU-Accelerated Molecular Dynamics in Asian Pharma Outsourcing Hubs

- 4.2.3 Mandatory Automotive ADAS Simulation Compliance in EU EURO-NCAP 2030 Roadmap

- 4.2.4 National Exascale Initiatives Driving Indigenous Processor Adoption in China and India

- 4.3 Market Restraints

- 4.3.1 Escalating Datacenter Water-Usage Restrictions in Drought-Prone U.S. States

- 4.3.2 Ultra-Low-Latency Edge Requirements Undermining Centralized Cloud Economics

- 4.3.3 Global Shortage of HBM3e Memory Constraining GPU Server Shipments 2024-26

- 4.4 Supply-Chain Analysis

- 4.5 Regulatory Outlook

- 4.6 Technological Outlook (Chiplets, Optical Interconnects)

- 4.7 Porter's Five Forces Analysis

- 4.7.1 Bargaining Power of Suppliers

- 4.7.2 Bargaining Power of Buyers

- 4.7.3 Threat of New Entrants

- 4.7.4 Threat of Substitutes

- 4.7.5 Intensity of Competitive Rivalry

5 MARKET SIZE AND GROWTH FORECASTS (VALUES)

- 5.1 By Component

- 5.1.1 Hardware

- 5.1.1.1 Servers

- 5.1.1.1.1 General-Purpose CPU Servers

- 5.1.1.1.2 GPU-Accelerated Servers

- 5.1.1.1.3 ARM-Based Servers

- 5.1.1.2 Storage Systems

- 5.1.1.2.1 HDD Arrays

- 5.1.1.2.2 Flash-Based Arrays

- 5.1.1.2.3 Object Storage

- 5.1.1.3 Interconnect and Networking

- 5.1.1.3.1 InfiniBand

- 5.1.1.3.2 Ethernet (25/40/100/400 GbE)

- 5.1.1.3.3 Custom/Optical Interconnects

- 5.1.2 Software

- 5.1.2.1 System Software (OS, Cluster Mgmt)

- 5.1.2.2 Middleware and RAS Tools

- 5.1.2.3 Parallel File Systems

- 5.1.3 Services

- 5.1.3.1 Professional Services

- 5.1.3.2 Managed and HPC-as-a-Service (HPCaaS)

- 5.2 By Deployment Mode

- 5.2.1 On-premise

- 5.2.2 Cloud

- 5.2.3 Hybrid

- 5.3 By Chip Type (Cross-Cut with Component)

- 5.3.1 CPU

- 5.3.2 GPU

- 5.3.3 FPGA

- 5.3.4 ASIC / AI Accelerators

- 5.4 By Industrial Application

- 5.4.1 Government and Defense

- 5.4.2 Academic and Research Institutions

- 5.4.3 BFSI

- 5.4.4 Manufacturing and Automotive Engineering

- 5.4.5 Life Sciences and Healthcare

- 5.4.6 Energy, Oil and Gas

- 5.4.7 Other Industry Applications

- 5.5 By Geography

- 5.5.1 North America

- 5.5.1.1 United States

- 5.5.1.2 Canada

- 5.5.1.3 Mexico

- 5.5.2 Europe

- 5.5.2.1 Germany

- 5.5.2.2 United Kingdom

- 5.5.2.3 France

- 5.5.2.4 Italy

- 5.5.2.5 Nordics (Sweden, Norway, Finland)

- 5.5.2.6 Rest of Europe

- 5.5.3 Asia-Pacific

- 5.5.3.1 China

- 5.5.3.2 Japan

- 5.5.3.3 India

- 5.5.3.4 South Korea

- 5.5.3.5 Singapore

- 5.5.3.6 Rest of Asia-Pacific

- 5.5.4 South America

- 5.5.4.1 Brazil

- 5.5.4.2 Argentina

- 5.5.4.3 Rest of South America

- 5.5.5 Middle East

- 5.5.5.1 Israel

- 5.5.5.2 United Arab Emirates

- 5.5.5.3 Saudi Arabia

- 5.5.5.4 Turkey

- 5.5.5.5 Rest of Middle East

- 5.5.6 Africa

- 5.5.6.1 South Africa

- 5.5.6.2 Nigeria

- 5.5.6.3 Rest of Africa

6 COMPETITIVE LANDSCAPE

- 6.1 Market Concentration

- 6.2 Strategic Moves (M&A, JVs, IPOs)

- 6.3 Market Share Analysis

- 6.4 Company Profiles (includes global-level overview, market-level overview, core segments, financials as available, strategic information, market rank/share for key companies, products and services, and recent developments)

- 6.4.1 Advanced Micro Devices, Inc.

- 6.4.2 NEC Corporation

- 6.4.3 Fujitsu Limited

- 6.4.4 Qualcomm Incorporated

- 6.4.5 Hewlett Packard Enterprise

- 6.4.6 Dell Technologies

- 6.4.7 Lenovo Group

- 6.4.8 IBM Corporation

- 6.4.9 Atos SE / Eviden

- 6.4.10 Cisco Systems

- 6.4.11 NVIDIA Corporation

- 6.4.12 Intel Corporation

- 6.4.13 Penguin Computing (SMART Global)

- 6.4.14 Inspur Group

- 6.4.15 Huawei Technologies

- 6.4.16 Amazon Web Services

- 6.4.17 Microsoft Azure

- 6.4.18 Google Cloud Platform

- 6.4.19 Oracle Cloud Infrastructure

- 6.4.20 Alibaba Cloud

- 6.4.21 Dassault Systemes

7 MARKET OPPORTUNITIES AND FUTURE OUTLOOK

- 7.1 White-space and Unmet-need Assessment